A Look at Lightning AI

What is Lightning AI (and Where Does Grid.ai Fit With It)?

By Femi Ojo, Senior Support Engineer, Lightning AI

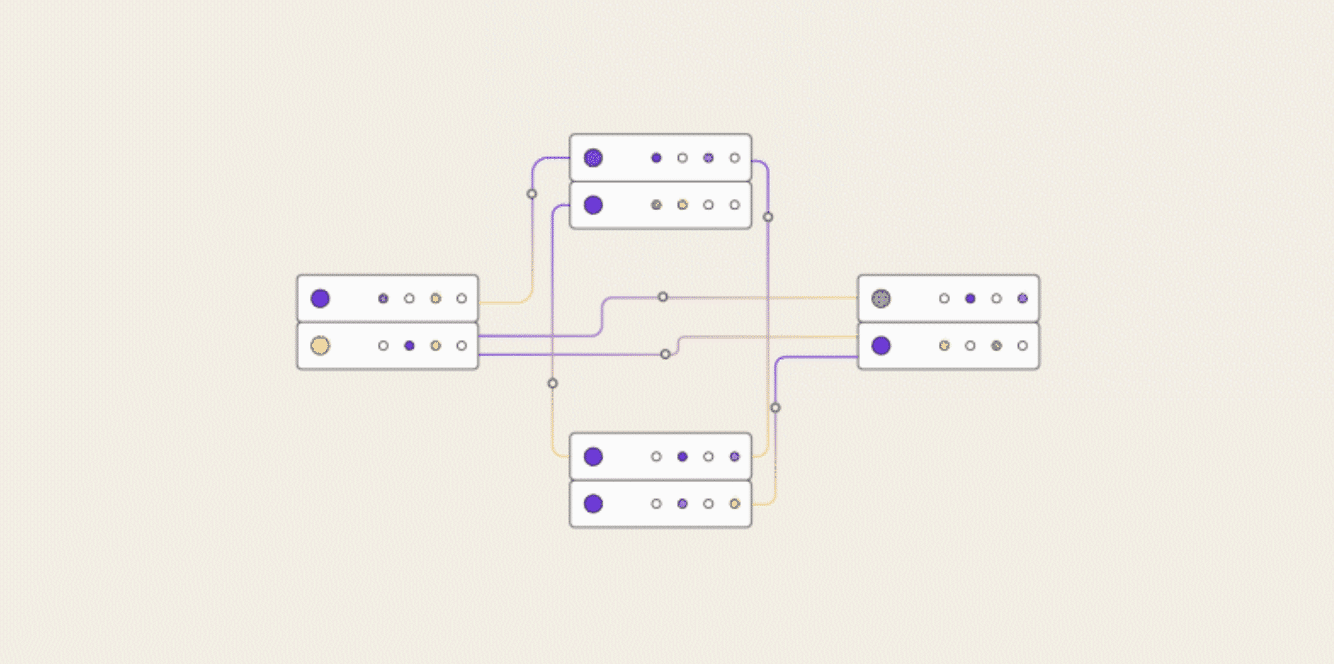

Lightning is a free, modular, distributed, and open-source framework for building Lightning Apps, in which the components you choose interact with one another.

How is Lightning AI Different from Grid.ai?

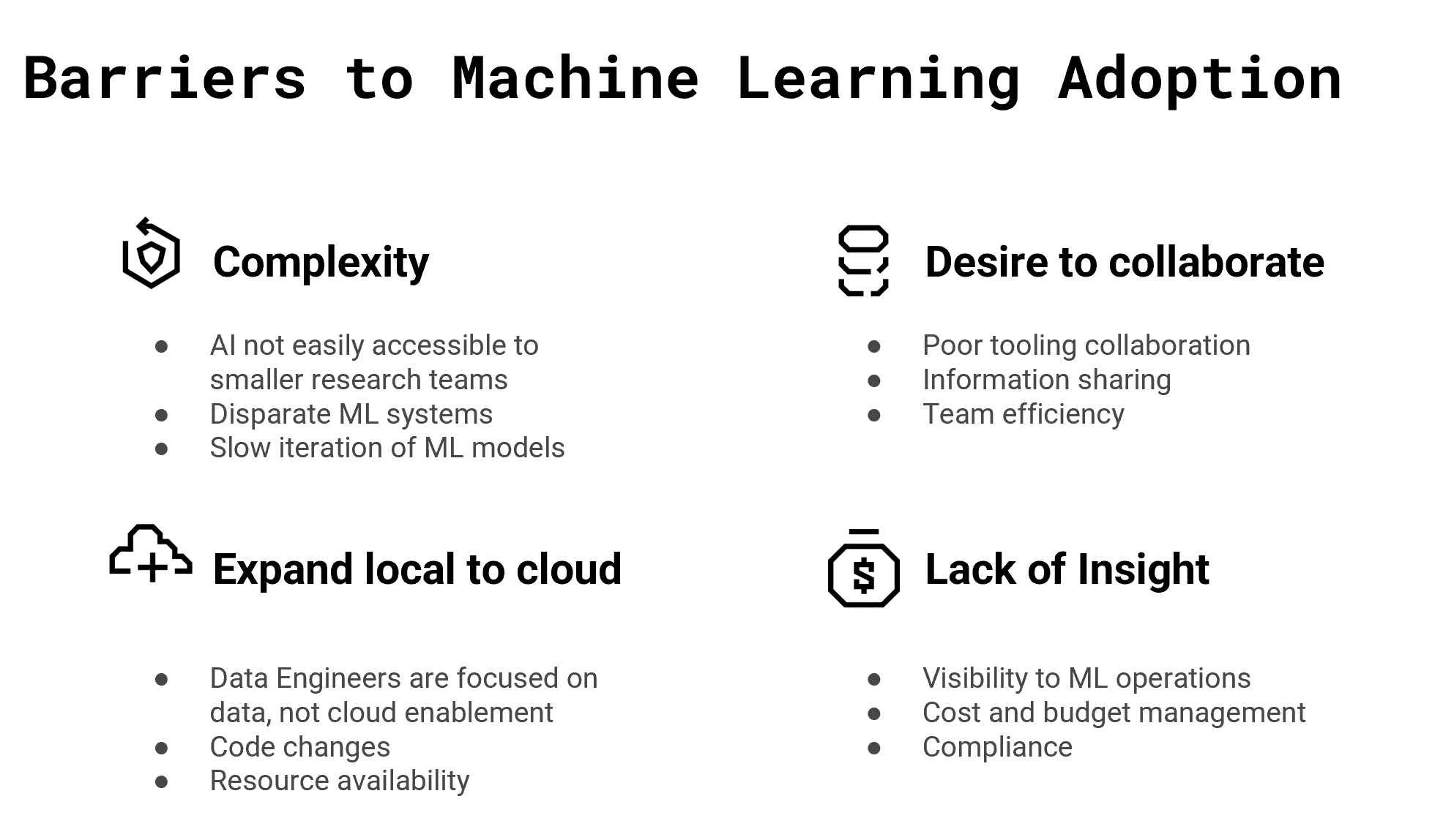

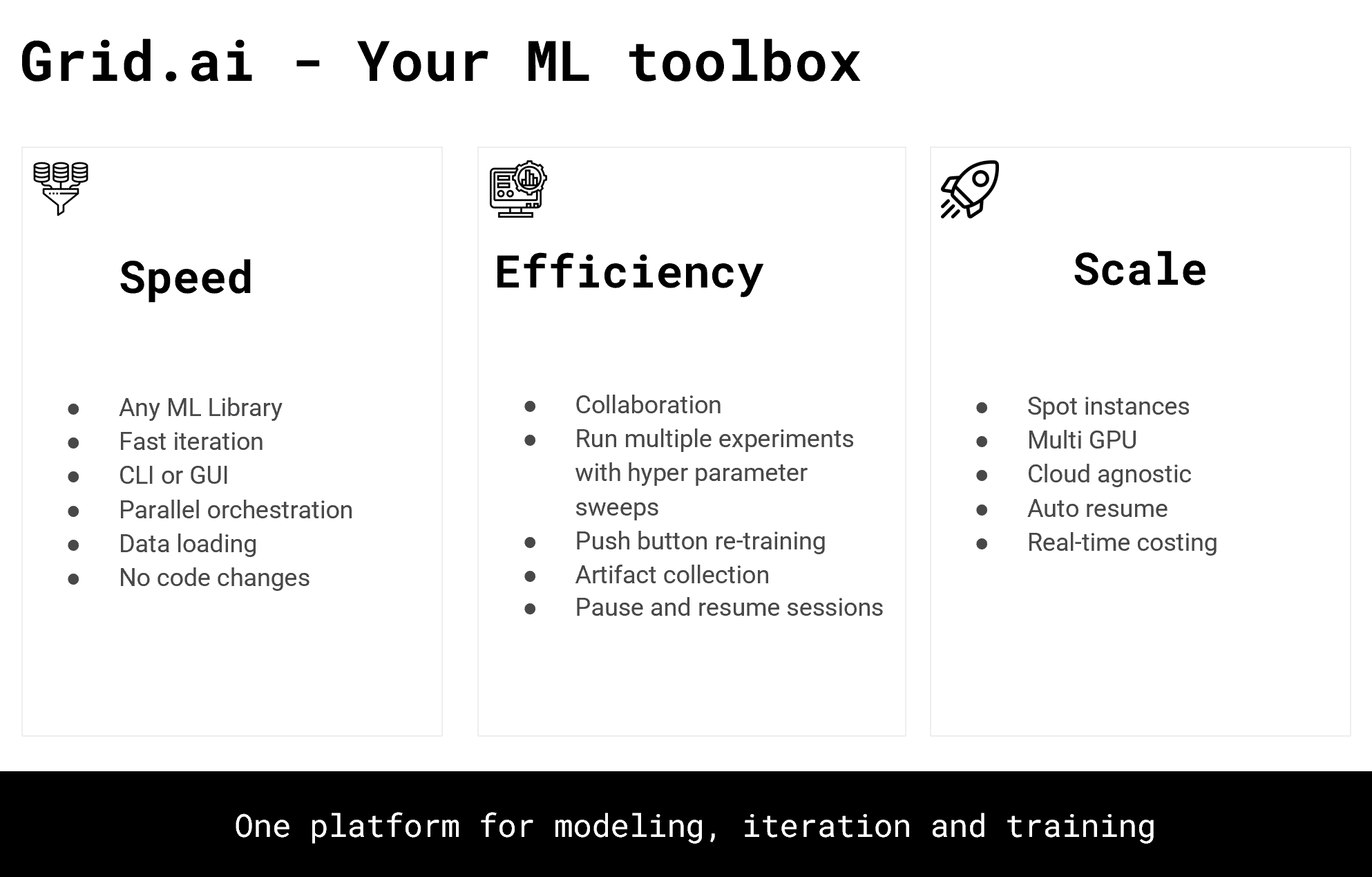

Lightning AI is the evolution of Grid.ai. The Grid platform enables users to scale their ML training workflows without the burden of maintaining, or even thinking about, cloud infrastructure. Lightning AI takes advantage of many of the things Grid.ai does well. In fact, Grid.ai is the backend that powers Lightning AI. Lightning AI builds upon Grid.ai by expanding further into the world of MLOps, helping to facilitate the entire end-to-end ML workflow. It is a platform for ML practitioners, built by ML practitioners and engineers.

By design, Lightning AI is a minimally opinionated framework: it guards developers against unorganized code while staying flexible enough to build interesting AI applications in a matter of days, depending on complexity. It is a product made for, and built by, engineers and creatives.

So, What’s So Great About Lightning AI?

Lightning Apps! Lightning Apps can be built for any AI use case, ranging from AI research to production-ready pipelines (and everything in between!). By abstracting the engineering boilerplate, Lightning AI allows researchers, data scientists, and software engineers to build highly scalable, production-ready Lightning Apps using the tools and technologies of their choice, regardless of their level of engineering expertise.

The problem today is that the AI ecosystem is fragmented, which makes building AI slower and more expensive than it needs to be. For example, getting a model into production and keeping it there can take hundreds, if not thousands, of hours of infrastructure maintenance. Lightning AI addresses this by providing an intuitive user experience for building, running, sharing, and scaling fully functioning Lightning Apps. A nice consequence is that building an AI application can now take days rather than years.

Here are a few cool things you can do with Lightning Apps:

- Integrate with your choice of tools – TensorBoard, Weights & Biases (W&B), Optuna, and more!

- Train models

- Serve models in production

- Interact with Apps via a UI

- Many more apps to come as we and the community collaborate to make the Lightning Apps experience one to remember

Lightning Apps Gallery

Along with the concept of Lightning Apps, Lightning introduces the Lightning Gallery. The Gallery is the community’s one-stop shop for a diverse set of curated applications and components. The value of the app and component galleries is limited only by the developer’s imagination. For example, there could be components for:

- Model Training

- Model Serving

- Monitoring

- Notification

Using only these four components, a complete MLOps pipeline can be built. For example, an anomaly detection app with the following characteristics could be built from them:

- Model training component – Train model

- Model deployed to production – Model detects an anomaly

- Monitoring component – Data drift detected and then triggers a model update

- Notification component – Notifies interested parties of the detected anomaly
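To make the wiring between these four components concrete, here is a minimal sketch in plain Python. It does not use the Lightning API: every class and function name below (`TrainComponent`, `ServeComponent`, `MonitorComponent`, `NotifyComponent`, `run_pipeline`) is hypothetical, the "model" is only a mean and standard deviation, and retraining on the most recent window is a simplifying assumption.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class TrainComponent:
    """Hypothetical stand-in for a model training component."""

    def run(self, data):
        # The "model" is just the mean and sample standard deviation of the data.
        return {"mean": mean(data), "std": stdev(data)}


@dataclass
class ServeComponent:
    """Hypothetical stand-in for a model serving component."""

    threshold: float = 3.0

    def is_anomaly(self, model, value):
        # Flag values more than `threshold` standard deviations from the mean.
        return abs(value - model["mean"]) > self.threshold * model["std"]


@dataclass
class MonitorComponent:
    """Hypothetical stand-in for a monitoring component."""

    tolerance: float = 1.0

    def drift_detected(self, model, recent):
        # Report drift when the recent mean moves more than `tolerance`
        # standard deviations away from the training mean.
        return abs(mean(recent) - model["mean"]) > self.tolerance * model["std"]


@dataclass
class NotifyComponent:
    """Hypothetical stand-in for a notification component."""

    messages: list = field(default_factory=list)

    def send(self, text):
        # A real component might email or page someone; here we only record it.
        self.messages.append(text)


def run_pipeline(train_data, stream, window=3):
    """Train, serve, monitor, and notify over a stream of numeric values."""
    trainer, server = TrainComponent(), ServeComponent()
    monitor, notifier = MonitorComponent(), NotifyComponent()
    model = trainer.run(train_data)
    recent = []
    for value in stream:
        if server.is_anomaly(model, value):
            notifier.send(f"anomaly detected: {value}")
        recent.append(value)
        if len(recent) == window:
            if monitor.drift_detected(model, recent):
                # Assumption: retrain on the most recent window only.
                model = trainer.run(recent)
                notifier.send("drift detected: model retrained")
            recent = []
    return notifier.messages


if __name__ == "__main__":
    baseline = [10, 11, 9, 10, 10, 11, 9, 10]
    print(run_pipeline(baseline, [10, 50]))
    print(run_pipeline(baseline, [12, 12, 12]))
```

In a real Lightning App each of these roles would be a gallery component rather than a local class, but the flow of data between them would follow the same shape.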

Gallery Examples

Prior to launching Lightning as a product, we saw the need for some existing apps that give the community a flavor of what can be created and showcase how easy it is.

Components (Building blocks)

- PopenPythonScript and TracerPythonScript – Enable easy transition from Python scripts to Lightning Apps. See our How to Move a Grid Project to Lightning Apps tutorial for an example.

- ServeGradio – Enables quick deployment of an interactive UI component.

- ModelInferenceAPI – Enables quick prototyping of model inferencing.

Applications (Building)

- Train & Demo PyTorch Lightning – This app trains a model using PyTorch Lightning and deploys it to be used for interactive demo purposes. This is not meant to be a real-time inference deployment app. There are other apps with real-time inference components that can be used to achieve < 1 ms inference times. This is a great app to use as a starting point for building a more complex app around a PyTorch Lightning model.

- Lightning Notebook – Use this app to run many Jupyter Notebooks on cloud CPUs, and even on machines with multiple GPUs. Notebooks are great for analysis, prototyping, or any time you need a scratchpad to try new ideas. Pause these notebooks to save money on cloud machines when you’re done with your work.

- Lightning Sweeper (HPO) – Train hundreds of models across hundreds of cloud CPUs/GPUs, using advanced hyperparameter tuning strategies.

- Collaborative Training – This app showcases how you can train a model across machines spread over the internet. This is useful for when you have a mixed set of devices (different types of GPUs) or machines that are spread over the internet that do not have specialized interconnect between them. Via the UI you can start your own training run or join others via a link! The app will handle connecting/updating and monitoring of your training job through the UI.

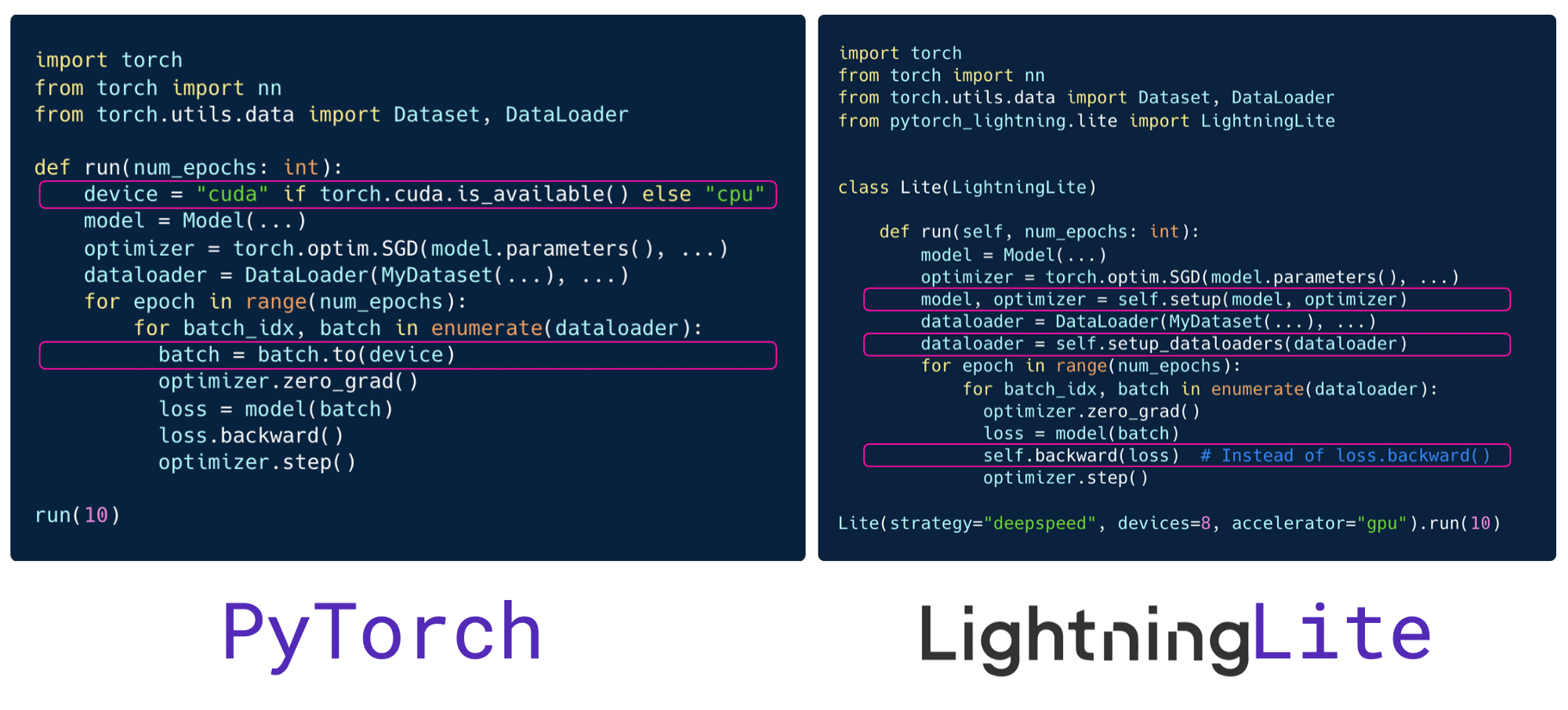

Convert Modeling Code into Apps

Converting your code to a Lightning App is straightforward with some of our convenience components. Both the PopenPythonScript and TracerPythonScript components are near-zero code change solutions for converting Python code to Lightning Apps! See here for all the code changes required to convert this official PyTorch Lightning image classification model to a Lightning App with the TracerPythonScript component.
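As the name suggests, a Popen-style wrapper runs an existing script in a child process, so the script itself does not need to change. The sketch below illustrates that idea with the standard library only. It is not the PopenPythonScript implementation, and `run_script` is a hypothetical helper introduced here for illustration.

```python
import subprocess
import sys


def run_script(script_path, script_args=()):
    """Run an unmodified Python script in a child process.

    Hypothetical helper: it mirrors the idea of wrapping a script,
    not the API of any Lightning component.
    """
    # Use the current interpreter so the child sees the same environment.
    command = [sys.executable, script_path, *script_args]
    # Capture stdout/stderr as text; the caller inspects the return code.
    return subprocess.run(command, capture_output=True, text=True, check=False)
```

For example, `run_script("train.py", ["--max_epochs", "1"])` would run a script named `train.py` (a placeholder file name) with its usual command-line arguments and return a `subprocess.CompletedProcess` holding its exit code and output.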

Future Roadmap

A great future lies ahead for Lightning AI, and we are excited to share with you a list of enhancements to come! Over the next several months to a year, we plan to enable the following:

- Allow users to locally run multiple apps in tandem – This will be great for users that have multiple Lightning Apps they are testing and developing.

- Multi-tenancy apps – This will allow the community to deploy Lightning Apps to their favorite cloud provider.

- App built-in user authentication – It is important for the community to be able to protect access to their Lightning Apps. This is a step in the right direction to making Lightning Apps more secure.

- Hot-reload

- Pause and resume Lightning App – Some users will be using Lightning Apps that host an interactive environment like Jupyter Notebooks. For such users we want to remove all pain points related to losing work and being billed when a machine is idle.

We welcome the community to build Lightning Apps that meet their needs and to share them in our open-source gallery ecosystem!

Get started today. We look forward to seeing what you #BuildWithLightning!