Creating Datastores

Overview of Datastores

To speed up training iteration, you can store your data in a Grid Datastore. Datastores are high-performance, low-latency, versioned datasets. If you have large-scale data, Datastores can resolve blockers in your workflow by eliminating the need to download the large dataset every time your script runs.

Datastores can be attached to Runs or Sessions, and they preserve the file format and directory structure of the data used to create them. Datastores support any file type: Grid treats each file as a collection of bytes that exists under a particular name within a directory structure (e.g. ./dir/some-image.jpg).

Why Use Datastores?

Data plays a critical role in everything you run on Grid. Datastores provide an optimized pipeline that removes as much latency as possible between the moment your program calls `with open(filename, 'r') as f:` and the instant the data reaches your script. Traversing the data directory structure in a Session should feel indistinguishable from cd-ing around your local workstation.

- Datastores are backed by cloud storage. They are made available to compute jobs as part of a read-only filesystem. If you have a script which reads files in a directory structure on your local computer, then the only thing you need to change when running on Grid is the location of the data directory!

- Datastores are a necessity when dealing with data at scale (e.g., data that cannot reasonably be downloaded from an HTTP URL each time a compute job begins): they provide a single, immutable dataset resource of nearly unlimited scale.

In fact, a single Datastore can be mounted into tens or hundreds of concurrently running compute jobs in seconds, ensuring that no expensive compute time is wasted waiting for data to download, extract, or otherwise “process” before you can move on to the real work.
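As an illustration of the "only the data directory changes" point, here is a minimal Python sketch. The helper name `iter_data_files` is hypothetical and not part of Grid; it only assumes that the Datastore appears as an ordinary read-only directory, as described above.

```python
from pathlib import Path


def iter_data_files(data_dir):
    """Yield (relative_path, size_in_bytes) for every file under data_dir.

    The function only reads, so it behaves the same on a local folder
    and on a read-only mounted directory.
    """
    root = Path(data_dir)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            yield path.relative_to(root).as_posix(), path.stat().st_size


# Hypothetical usage; only the directory argument differs:
#   locally:  list(iter_data_files("./imagenet_folder"))
#   on Grid:  list(iter_data_files("/datastores/imagenet"))
```

The rest of the script stays unchanged between the two environments; only the path passed in differs.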

A couple of notes:

- Grid does not charge for data storage.

- In order to ensure data privacy & flexibility of use, Grid never attempts to process the contents of the files or infer/optimize for any particular usage behaviors based on file contents.

How Is Data Accessed in a Datastore?

By default, Datastores are mounted at /datastores/<datastore-name>/ in both Runs and Sessions. If you need the Datastore mounted at a different location, you can specify the mount path manually using the CLI.
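A script can build the default mount location from the Datastore name rather than hard-coding it. The helper below is a hypothetical convenience, not a Grid API; it simply encodes the /datastores/<datastore-name>/ convention stated above.

```python
from pathlib import PurePosixPath


def default_mount_path(datastore_name):
    """Return the default mount location for a Datastore name.

    Encodes only the documented default; a mount path customized via
    the CLI would not be reflected here.
    """
    return str(PurePosixPath("/datastores") / datastore_name) + "/"


print(default_mount_path("imagenet"))  # prints /datastores/imagenet/
```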

How to Create Datastores

Datastores can be created from a local filesystem, a public S3 bucket, an HTTP URL, a Session, or a Cluster.

Local Filesystem (i.e. Uploading Files from a Computer)

There are a couple of options when uploading from a computer depending on the size of your dataset.

Small Dataset

You can use the UI to create Datastores from datasets smaller than 1 GB (a single file or a folder). Above 1 GB, you will reach the browser limit for uploading data. In these situations, you should use the CLI to create Datastores.
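If you are unsure which side of the limit a dataset falls on, you can measure it first. The sketch below is illustrative only: the helper names are hypothetical, and it assumes that "1 GB" means 10^9 bytes, which the text above does not specify.

```python
import os

ONE_GB = 10**9  # assumption: decimal gigabyte; the exact limit is not documented here


def dataset_size_bytes(path):
    """Return the total size in bytes of a file, or of all files under a folder."""
    if os.path.isfile(path):
        return os.path.getsize(path)
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            file_path = os.path.join(dirpath, name)
            if not os.path.islink(file_path):  # skip symlinks to avoid double counting
                total += os.path.getsize(file_path)
    return total


def suggested_upload_method(path, limit=ONE_GB):
    """Return "ui" for datasets under the limit, otherwise "cli"."""
    return "ui" if dataset_size_bytes(path) < limit else "cli"
```

Since the CLI also works for small datasets, this check only matters if you would prefer to use the UI.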

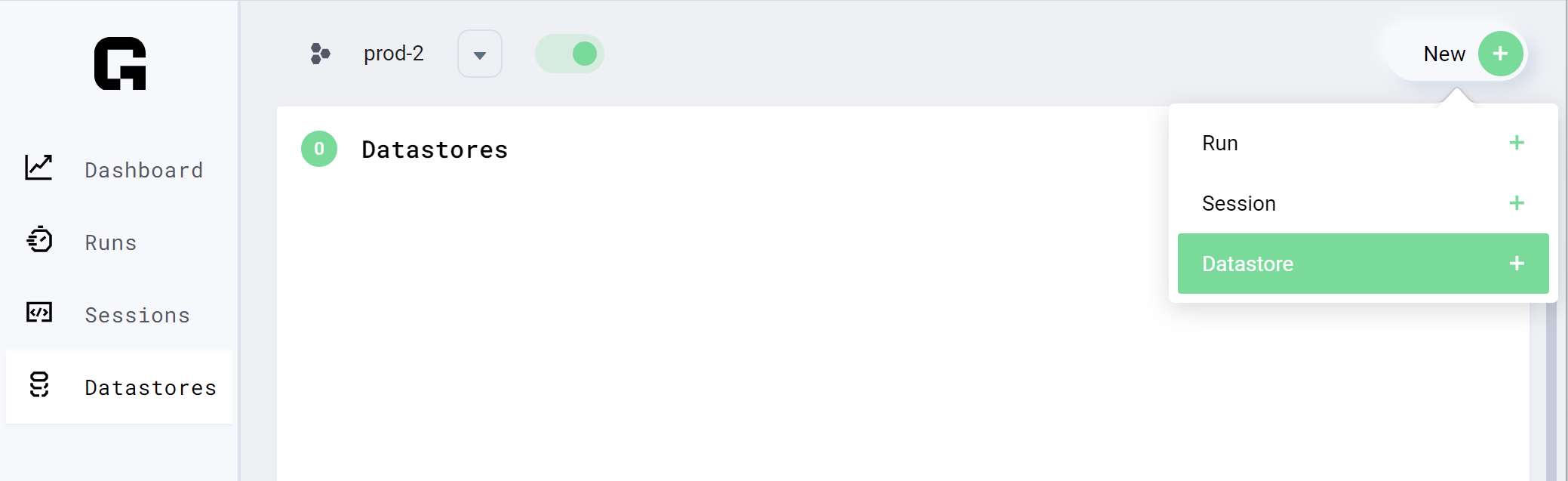

From the Grid UI, you can create a Datastore by selecting the New button at the top right where you can then choose the Datastore option.

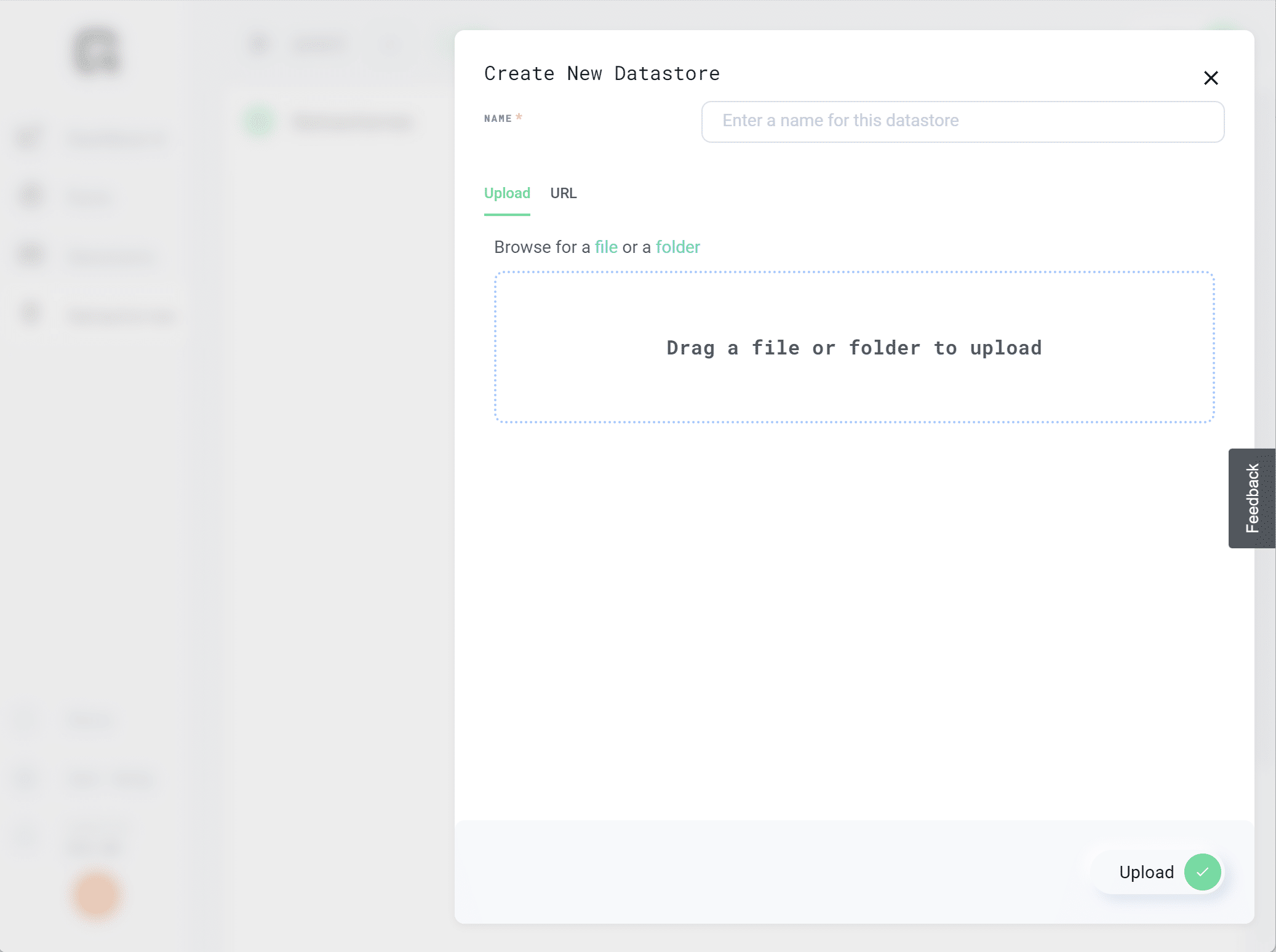

The Create New Datastore window will open and you will have the following customization options:

- Name

- Options to upload a dataset or link using a URL

To upload a dataset under 1 GB, select the file or folder and click upload, or drag and drop it into the box.

When you have finished with your customizations, select the Upload button at the bottom right to create your new Datastore.

Large Datasets (1 GB+)

For datasets larger than 1 GB, you should use the CLI (although the CLI can also be used on small datasets just as easily!).

First, install the Grid CLI and log in:

pip install lightning-grid --upgrade
grid login

Next, use the `grid datastore` command to upload any folder:

grid datastore create --name imagenet ./imagenet_folder/

This method works from:

- A laptop.

- An interactive session.

- Any machine with an internet connection and Grid installed.

- A corporate cluster.

- An academic cluster.

Create from a Public S3 Bucket

Any public AWS S3 bucket can be used to create Datastores on the Grid public cloud or on a BYOC (Bring Your Own Credentials) cluster by using the Grid UI or CLI.

Currently, Grid does not support private S3 buckets.

Using the UI

Click New -> Datastore and choose “URL” as the upload mechanism. Provide the S3 bucket URL as the source.

Using the CLI

In order to use the CLI to create a datastore from an S3 bucket, we simply need to pass an S3 URL in the form s3://<bucket-name>/<any-desired-subpaths>/ to the grid datastore create command.

For example, to create a Datastore from the ryft-public-sample-data/esRedditJson bucket we simply execute:

grid datastore create s3://ryft-public-sample-data/esRedditJson/

This will copy the files from the source bucket into the managed Grid Datastore storage system.

In this example, you’ll see the --name option in the CLI command was omitted. When the --name option is omitted, the datastore name is assigned the name of the last “directory” making up the source path. So, in the case above, the Datastore would be named “esredditjson” (the name is converted to all lowercase ASCII non-space characters).
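The implicit naming rule can be approximated in a few lines of Python. This is a sketch of the behavior described above, not Grid's actual implementation: the function name is hypothetical, falling back to the bucket name when there is no subpath is an assumption, and any handling of non-ASCII characters is left out because the text does not describe it.

```python
from urllib.parse import urlparse


def implicit_name_from_s3(url):
    """Approximate the default Datastore name for an s3:// source."""
    parsed = urlparse(url)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"expected s3://<bucket-name>/..., got {url!r}")
    # Keep only non-empty path segments so a trailing slash is ignored.
    segments = [segment for segment in parsed.path.split("/") if segment]
    # Assumption: with no subpath, use the bucket name itself.
    last = segments[-1] if segments else parsed.netloc
    # Lowercase and drop whitespace, per the description above.
    return "".join(last.lower().split())


print(implicit_name_from_s3("s3://ryft-public-sample-data/esRedditJson/"))
# prints esredditjson
```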

To use a different name, override the implicit naming by passing the --name option explicitly. For example, to create a Datastore named “lightning-train-data” from this bucket, run:

grid datastore create s3://ryft-public-sample-data/esRedditJson/ --name lightning-train-data

Using the --no-copy Option via the CLI

In certain cases, your S3 bucket may fit one (or both) of the following criteria:

- the bucket is continually updating with new data which you want included in a Grid Datastore

- the bucket is particularly large (leading to long Datastore creation times)

In these cases, you can pass the --no-copy flag to the grid datastore create command.

Example:

grid datastore create s3://ryft-public-sample-data/esRedditJson/ --no-copy

This allows you to directly mount public S3 buckets to a Grid Datastore, without having Grid copy over the entire dataset. This offers better support for large datasets and incremental update use cases.

When using this flag, you cannot remove files from your bucket. If you’d like to add files, please create a new version of the Datastore after you’ve added files to your bucket.

If you are using this flag via the Grid public cloud, then the source bucket should be in the AWS us-east-1 region or there will be significant latency when you attempt to access the Datastore files in a Run or Session.
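If you create Datastores from a script, you can assemble the command as an argument list and pass it to `subprocess.run`. The sketch below uses only the options shown in this section (`--name` and `--no-copy`). The helper name is hypothetical, and rejecting `--no-copy` for non-S3 sources is an assumption based on the flag being described only for S3 buckets.

```python
def build_create_command(source, name=None, no_copy=False):
    """Build the argument list for `grid datastore create`."""
    # Assumption: --no-copy is only meaningful for s3:// sources.
    if no_copy and not source.lower().startswith("s3://"):
        raise ValueError("--no-copy is only described for S3 bucket sources")
    command = ["grid", "datastore", "create", source]
    if name:
        command += ["--name", name]
    if no_copy:
        command.append("--no-copy")
    return command


# Hypothetical usage (requires the Grid CLI to be installed and logged in):
#   import subprocess
#   subprocess.run(build_create_command(
#       "s3://ryft-public-sample-data/esRedditJson/", no_copy=True), check=True)
```

Passing a list rather than a single string avoids shell quoting problems with paths that contain spaces.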

Create from an HTTP URL

Datastores can be created from a .zip or .tar.gz file accessible at an unauthenticated HTTP URL. By using an HTTP URL pointing to an archive file as the source of a Grid Datastore, the platform will automatically kick off a (server-side) process which downloads the file, extracts the contents, and sets up a Datastore file directory structure matching the extracted contents of the archive.
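Before submitting a URL, it can help to check that it matches the constraints above. The sketch below is a hypothetical client-side pre-check, not part of Grid. It only looks at the URL scheme and file extension, treats extensions case-insensitively (an assumption), and cannot tell whether the URL is actually reachable without authentication.

```python
from urllib.parse import urlparse

SUPPORTED_SUFFIXES = (".zip", ".tar.gz")


def looks_like_archive_url(url):
    """Return True if url is http(s) and its path ends in .zip or .tar.gz."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        return False
    # Assumption: the extension check ignores letter case.
    return parsed.path.lower().endswith(SUPPORTED_SUFFIXES)
```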

Using the UI

Click New -> Datastore and choose “URL” as the upload mechanism. Provide the HTTP URL as the source.

Using the CLI

In order to use the CLI to create a datastore from an HTTP URL, we simply need to pass a URL which begins with either http:// or https:// to the grid datastore create command.

For example, to create a Datastore from the MNIST training set at https://datastore-public-bucket-access-test-bucket.s3.amazonaws.com/subfolder/trainingSet.tar.gz, we simply execute:

grid datastore create https://datastore-public-bucket-access-test-bucket.s3.amazonaws.com/subfolder/trainingSet.tar.gz

In this example, you’ll see the --name option in the CLI command was omitted. When the --name option is omitted, the Datastore name is assigned from the last path component of the URL (with suffixes stripped). In the case above, the Datastore would be named “trainingset” (the name is converted to all lowercase ASCII non-space characters).
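For HTTP sources, the same idea applies with the extra step of stripping suffixes. The Python sketch below is an approximation of the rule described above, not Grid's implementation: the function name is hypothetical, and it assumes that everything after the first dot in the file name counts as a suffix, so `data.v2.tar.gz` would become `data`.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def implicit_name_from_url(url):
    """Approximate the default Datastore name for an http(s) archive URL."""
    filename = PurePosixPath(urlparse(url).path).name
    # Assumption: all suffixes start at the first dot in the file name.
    stem = filename.split(".", 1)[0]
    # Lowercase and drop whitespace, per the description above.
    return "".join(stem.lower().split())


print(implicit_name_from_url(
    "https://datastore-public-bucket-access-test-bucket.s3.amazonaws.com/subfolder/trainingSet.tar.gz"
))
# prints trainingset
```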

To use a different name, override the implicit naming by passing the --name option explicitly. For example, to create a Datastore named “lightning-train-data” from this URL, run:

grid datastore create https://datastore-public-bucket-access-test-bucket.s3.amazonaws.com/subfolder/trainingSet.tar.gz --name lightning-train-data

Create from a Session

For large datasets that require processing or a lot of manual work, we recommend this flow:

- Launch an Interactive Session

- Download the data

- Process it

- Upload

When you are in the interactive Session, use the terminal multiplexer Screen to make sure you don’t interrupt your upload session if your local machine is shut down or experiences network interruptions.

# start screen (lets you close the tab without killing the process)
screen -S some_name

Now do whatever processing you need:

# download, etc...
curl http://a_dataset
unzip a_dataset
# process
do_something
something_else
bash process.sh
...

When you’re done, upload to Grid via the CLI (on the Interactive Session):

grid datastore create imagenet_folder --name imagenet

The Grid CLI is auto-installed on sessions and you are automatically logged in with your Grid credentials.

Note: If your dataset is larger than 1 GB, we suggest creating an Interactive Session and uploading it from there. Internet speed is much faster in Interactive Sessions, so upload times will be shorter.

Create from a Cluster

Grid also allows you to upload from:

- A corporate cluster.

- An academic cluster.

First, start screen on the jump node (to run jobs in the background):

screen -S upload

If your jump node allows a memory-intensive process, then skip this step. Otherwise, request an interactive machine. Here’s an example using SLURM:

srun --qos=batch --mem-per-cpu=10000 --ntasks=4 --time=12:00:00 --pty bash

Once the job starts, install and log into Grid (get your username and ssh keys from the Grid Settings page).

# install
pip install lightning-grid --upgrade
# login
grid login --username YOUR_USERNAME --key YOUR_KEY

Next, use the `grid datastore` command to upload any folder:

grid datastore create ./imagenet_folder/ --name imagenet

You can now safely close your SSH connection to the cluster (the screen will keep things running in the background).

And that’s it for creating Datastores in Grid! You can check out other Grid tutorials, or browse the Grid Docs to learn more about anything not covered in this tutorial.

As always, Happy Grid-ing!