How to Move a Grid Project to Lightning Apps

The Evolution of Grid.ai

By Femi Ojo, Senior Support Engineer, Lightning AI

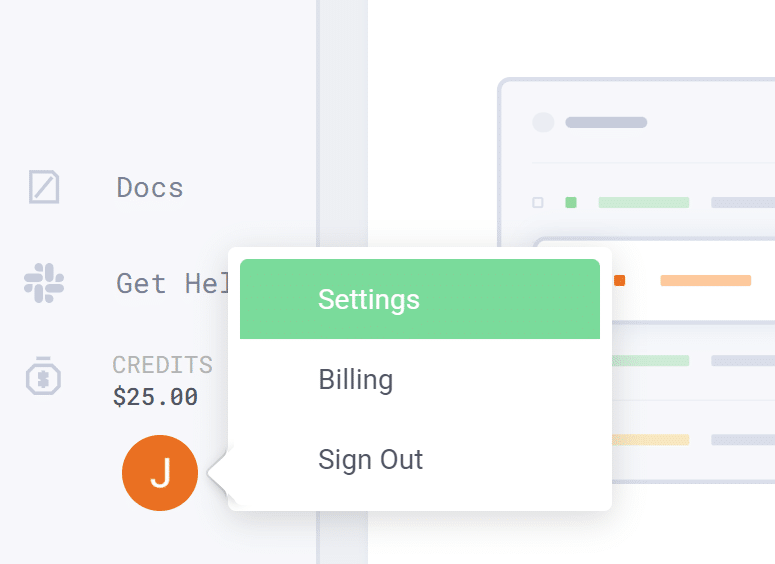

With the launch of its flagship product, Lightning, Grid.ai has rebranded as Lightning AI. This name change lets us unify our offerings and expand support for additional products and services as we work toward a future that makes it easier for you to build AI solutions.

Prior to the new launch, Grid.ai was maintaining popular open source projects like PyTorch Lightning, PyTorch Lightning Bolts, and PyTorch Lightning Flash. This made the connection between Grid.ai and PyTorch Lightning projects a bit unclear. We felt that rebranding to Lightning as an organization would make it a lot easier for the community to understand the relationship between all of our product offerings.

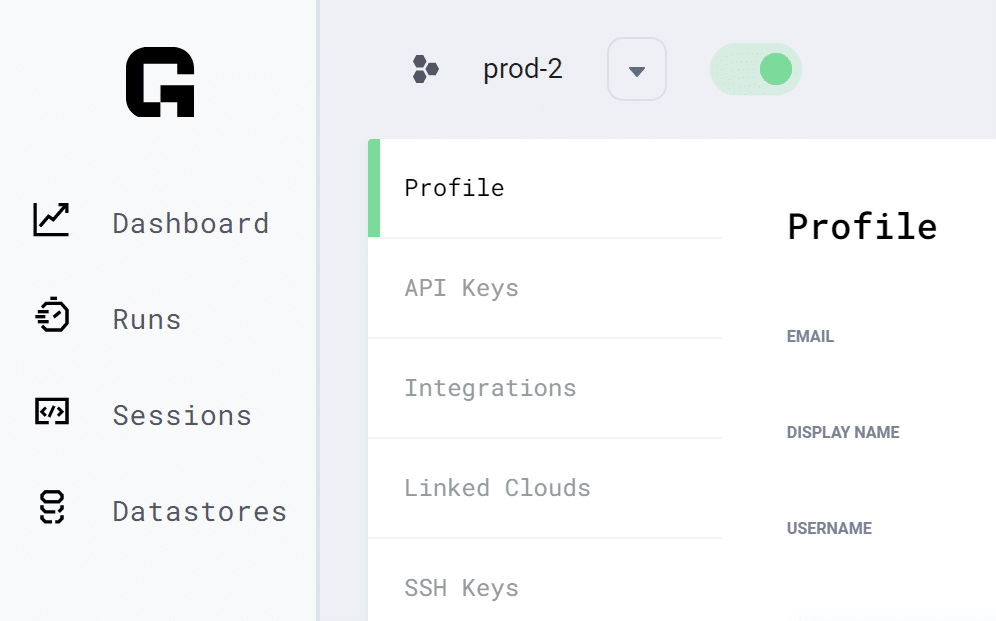

For the time being, if you use Grid, this rebranding of our corporate identity affects only our name: both the Grid.ai website and the Grid platform remain unchanged. However, we highly recommend that you check out the new Lightning.ai website and learn how Lightning Apps can accelerate your research.

What this Means for You

Fret not: we have carefully considered the implications for your workflows, and we have spent time making sure that moving from Grid.ai to Lightning Apps is a low-barrier task. Your source script is the entry point for both Lightning Apps and Grid. However, because Lightning Apps are app-based, we have had to relax our zero-code-change promise to a near-zero-code-change promise. This relaxation allowed us to build more flexibility and freedom into the Lightning product. Moving from Grid to Lightning Apps is a simple two-step process:

- Convert existing code to a Lightning App.

- Add the `--cloud` flag to your CLI invocation and, optionally, make minor parameter additions to customize your cloud resources.

We will discuss both of these steps in turn.

Converting Your Code to a Lightning App

Converting your code to a Lightning App is a seamless task with some of our convenient components. Both the `PopenPythonScript` and `TracerPythonScript` components are near-zero-code-change solutions for turning Python scripts into Lightning Apps!
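Both components wrap an ordinary standalone Python script, so the script itself can stay as it is. As a minimal sketch, consider a training script shaped like the hypothetical one below. Its name, arguments, and defaults are illustrative and are not taken from the official example. It parses its own command-line arguments and does its work only when executed directly, which makes it the kind of script you would otherwise launch straight from the CLI.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    # Hypothetical CLI arguments; a real training script defines its own.
    parser = argparse.ArgumentParser(description="Hypothetical CIFAR baseline")
    parser.add_argument("--max_epochs", type=int, default=10)
    parser.add_argument("--lr", type=float, default=0.01)
    return parser.parse_args(argv)


def main(argv: Optional[List[str]] = None) -> argparse.Namespace:
    args = parse_args(argv)
    # Placeholder for the real training loop (e.g. a PyTorch Lightning Trainer).
    print(f"Training for {args.max_epochs} epochs with lr={args.lr}")
    return args


if __name__ == "__main__":
    # Runs only when the file is executed directly,
    # e.g. `python cifar_baseline.py --max_epochs 5`.
    main()
```

Nothing in this script refers to Lightning. That is what keeps the conversion close to zero code changes: the app code lives in a separate file.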

Below is an example of how to do this with an image classifier model taken from the official Lightning repository.

Imports

```python
from lightning_app.storage import Path
from lightning_app.components.python import TracerPythonScript
import lightning as L
```

The imports above look simple, but they are powerful: they bring in nearly all the core components needed to build a Lightning App. Let's look at each of them.

- TracerPythonScript – This component makes it easy to turn an existing .py file into a LightningWork. It can take any standalone .py script and convert it into the object Lightning expects. Even if your script takes arguments that you would normally pass on the command line, TracerPythonScript can handle them.

- L – Importing lightning as L is the idiomatic convention for Lightning Apps. Through L we access all of Lightning's core features, including the classes below.

- LightningWork – LightningWorks are the building blocks of all Lightning Apps. A LightningWork is optimized to run long-running jobs and to integrate third-party services.

- LightningFlow – LightningFlows are the managers of LightningWorks and ensure proper coordination among them. A LightningFlow runs in a never-ending loop that continually checks the state of all its LightningWork components and updates them accordingly.

- LightningApp – This is essential to running any Lightning App; it is the entry point that instantiates the app.

- Path – To ensure proper data access across LightningWorks, we've introduced the Path object. Lightning's Path object behaves like pathlib.Path, so it works as a seamless drop-in replacement.
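Because Lightning's Path is described as a drop-in replacement for pathlib.Path, the path arithmetic used in the root flow below should behave as it does in the standard library. This sketch uses the standard library's PurePosixPath so that it runs without Lightning installed. The app location is a hypothetical placeholder for what `Path(__file__)` would give you.

```python
from pathlib import PurePosixPath

# Hypothetical location of app.py; the real app derives it from __file__.
app_file = PurePosixPath("/home/user/cifar_app/app.py")

# Same `/` composition as the root flow: parent directory + relative script path.
script_path = app_file.parent / "scripts/cifar_baseline.py"

print(script_path)         # → /home/user/cifar_app/scripts/cifar_baseline.py
print(script_path.name)    # → cifar_baseline.py
print(script_path.parent)  # → /home/user/cifar_app/scripts
```

Resolving the script relative to the app file, rather than the current working directory, means the app finds its script no matter where you launch it from.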

Define the Root Flow

Now that you've been introduced to some core features of Lightning, let's dive into the actual code.

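```python
class CifarApp(L.LightningFlow):
    def __init__(self):
        super().__init__()
        # Location of the unmodified training script, relative to this app file.
        script_path = Path(__file__).parent / "scripts/cifar_baseline.py"
        # TracerPythonScript is a LightningWork that runs the script.
        self.tracer_python_script = TracerPythonScript(script_path)

    def run(self):
        # run() is called repeatedly, so launch the script only once.
        if not self.tracer_python_script.has_started:
            self.tracer_python_script.run()
```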

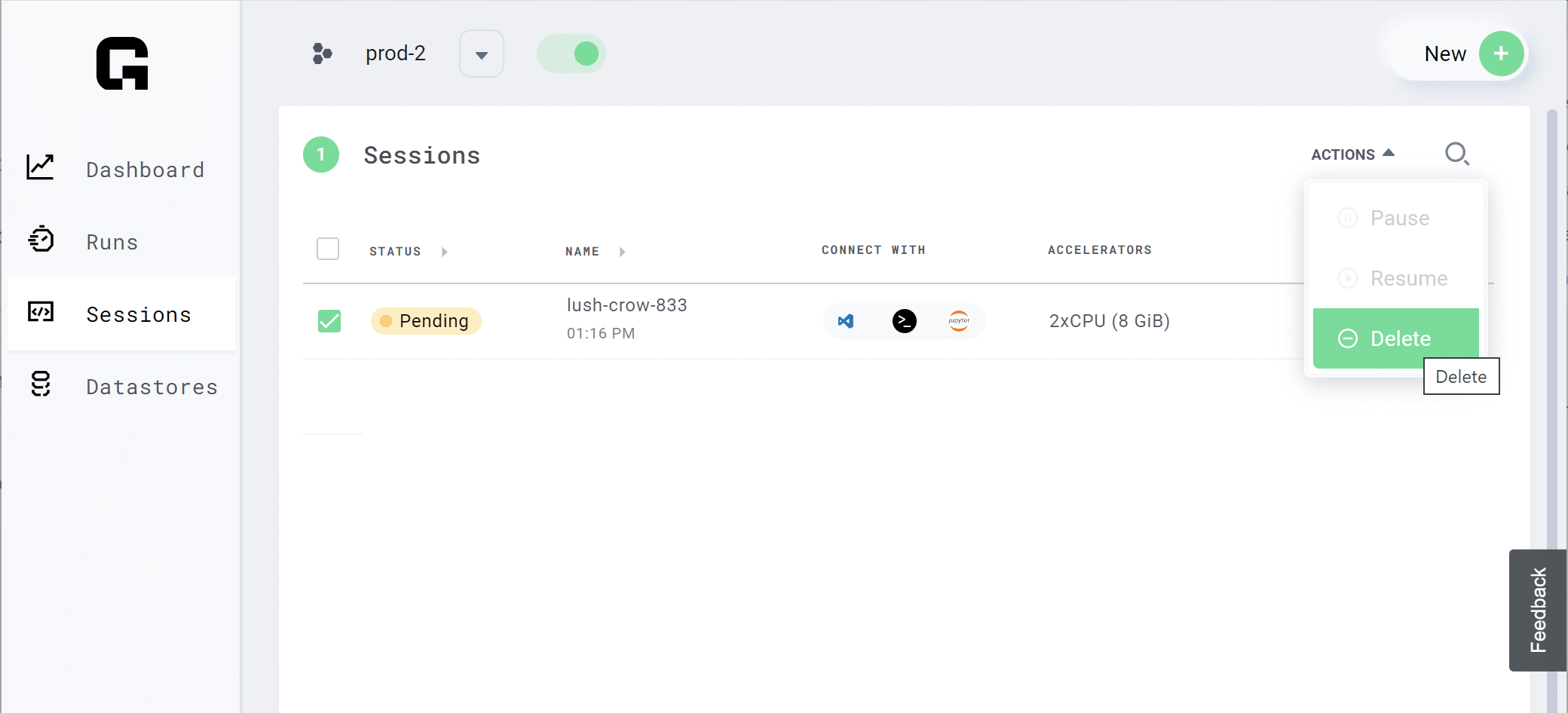

Here we defined our root flow. Believe it or not, this is as complicated as it gets when moving from something like a Grid Run to a Lightning App. The Component Gallery also has components that emulate the Grid Session environment. These are covered in our Intro to Lightning blog post, so be sure to check it out.

- Every object that inherits from LightningFlow or LightningWork (the latter is not subclassed in this example) must call `super().__init__()` in its `__init__` method.

- `script_path` – This is the path to your executable script.

- `self.tracer_python_script` – This is the work that your flow is going to run.

- `has_started` – This is a built-in attribute of a LightningWork. Here it serves as a flag so that the work invokes the script only once.

- `def run` – This is a required method. The app calls the root flow's run method repeatedly while it is running, which is why the `has_started` check is needed.
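To see why the `has_started` guard matters, here is a deliberately simplified, synchronous toy model in plain Python. It is not Lightning's implementation, and ToyWork, GuardedFlow, UnguardedFlow, and event_loop are hypothetical names. It only mimics the idea that the root flow's run method is called over and over. In this toy, a flow without the guard launches its work on every pass.

```python
class ToyWork:
    """Hypothetical stand-in for a LightningWork that counts its launches."""

    def __init__(self) -> None:
        self.has_started = False
        self.launches = 0

    def run(self) -> None:
        # In this toy, starting is instantaneous and synchronous.
        self.has_started = True
        self.launches += 1


class GuardedFlow:
    """Mirrors CifarApp.run: launch the work only if it has not started."""

    def __init__(self) -> None:
        self.work = ToyWork()

    def run(self) -> None:
        if not self.work.has_started:
            self.work.run()


class UnguardedFlow:
    """Same flow without the has_started check, for comparison."""

    def __init__(self) -> None:
        self.work = ToyWork()

    def run(self) -> None:
        self.work.run()


def event_loop(flow, ticks: int) -> None:
    """Call flow.run() repeatedly, standing in for the app's never-ending loop."""
    for _ in range(ticks):
        flow.run()


guarded, unguarded = GuardedFlow(), UnguardedFlow()
event_loop(guarded, ticks=5)
event_loop(unguarded, ticks=5)
print(guarded.work.launches)    # → 1
print(unguarded.work.launches)  # → 5
```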

Finally, it’s time to reveal the last line needed to have a fully functioning Lightning App.

Fully Forming the Lightning App

```python
# LightningApp is accessed through the `L` alias imported above.
app = L.LightningApp(CifarApp())
```

As stated before, LightningApp is the entry point when you run `lightning run app app.py`. It takes the root flow object as its argument.

See here for all the code changes required to do this with the TracerPythonScript component.

Testing Locally

To test your Lightning App locally all you have to do is run the following command from the directory containing your app.

```
lightning run app <filename>.py
```

Note that the app file can have any name. By convention, however, it is called app.py.

Shifting to Cloud

Shifting to the cloud is as simple as adding the `--cloud` flag to your CLI invocation when you deploy the app. As a complete example, this is what we would run to execute our CIFAR training code in the cloud:

```
lightning run app app.py --cloud
```
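Since the only difference between a local run and a cloud run is the `--cloud` flag, a launcher script can build either command with the same function. The sketch below is a hypothetical helper; build_run_command and launch are illustrative names, not part of Lightning. It only assembles the arguments shown above. Actually executing the command assumes the lightning CLI is installed and on your PATH.

```python
import shlex
import subprocess
from typing import List


def build_run_command(app_file: str = "app.py", cloud: bool = False) -> List[str]:
    """Assemble the `lightning run app` invocation, optionally targeting the cloud."""
    cmd = ["lightning", "run", "app", app_file]
    if cloud:
        # The one change needed to move from a local run to the cloud.
        cmd.append("--cloud")
    return cmd


def launch(app_file: str = "app.py", cloud: bool = False) -> None:
    """Run the command; requires the lightning CLI on PATH (not called here)."""
    subprocess.run(build_run_command(app_file, cloud), check=True)


print(shlex.join(build_run_command()))            # → lightning run app app.py
print(shlex.join(build_run_command(cloud=True)))  # → lightning run app app.py --cloud
```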

When you deploy with `--cloud`, a few things happen:

- Your LightningWorks run on separate cloud instances.

- By default, Lightning places your works on the least powerful CPU machine offered by Lightning AI, free of charge.

- The compute each work uses is customizable by tier. You choose a tier by giving a work a CloudCompute object, as explained in the documentation below.

For more advanced or customized deployments, see our Docker + requirements documentation and our documentation on customizing cloud resources.

Note: Not all Grid features are currently supported in Lightning Apps. To learn which features are on the roadmap, read the Intro to Lightning blog post.

Get started today. We look forward to seeing what you #BuildWithLightning!